Pinot RCE, DataDog, your 2FA codes off the rails

If your web application sends SMS messages to your customers, you probably submit them via a commercial third-party API. The alternative, dealing with mobile networks directly, would require significantly more engineering resources. Whether you're sending 2FA codes or messages from healthcare providers, this is a sensible decision, but it does expose your data to the vendor's information security practices.

As part of your due diligence, you can require vendors to comply with frameworks such as SOC 2, HIPAA and the rest of the alphabet soup, but that says very little about the real-world information security practices of their engineers and data scientists.

I previously published research about Apache Pinot on Doyensec's blog, where I dropped a few vulnerabilities, the most serious of which have been fixed.

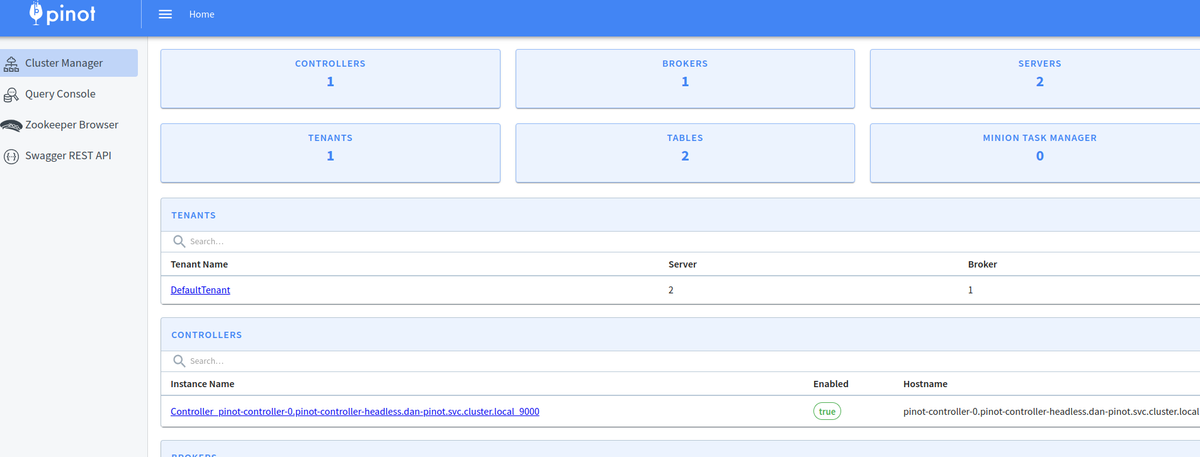

One of the components of Apache Pinot is the Controller. This service has a web interface allowing you to submit SQL queries to the Pinot Broker. It also allows you to configure the tables, settings and ZooKeeper, essentially giving admin access to the database service. What's more, from the Controller you can access an RCE "feature" giving root access to the Server component.

Surely nobody would be foolhardy enough to make such a service accessible on the internet!

Well, I've looked, and there aren't many, but amongst the test servers you do see the odd cryptocurrency exchange. As described in my previous Pinot research article, RCE is not usually exciting for its own sake on such a containerised platform. Instead, the goal is to steal temporary cloud credentials. When I find a Controller hosted in Amazon EKS or EC2, I like to check whether, instead of being sandboxed from the rest of the organisation, the RCE gives me fun IAM permissions.

The enumeration is the same as described in my BSides talk about Apache YARN / Hadoop clusters.

Until now, I had mostly ended up in boring test or staging accounts. In fact, the worst offenders for storing all of their clients' production and test environments in a single AWS account are AWS consultancies.

Permissions for a recently breached target were mostly fine. Appropriate policies were applied so that, with the AWS credentials exfiltrated via the Pinot RCE, I could only access the staging S3 buckets, not production. Nothing worth reporting to their VDP. When it came to SSM, however, I hit the jackpot: out popped over 2000 SSM parameters. SSM Parameter Store, part of AWS Systems Manager, is a managed store for configuration data and secrets. While most parameters were test-related, I got lucky with some production secrets:

- a Slack token without read access (write-only)

- a GitHub token with useful permissions

- DataDog application and API keys
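The kind of scoping that kept me out of the production S3 buckets could equally have been applied to Parameter Store. A minimal sketch, assuming (hypothetically) that parameters are organised under `/staging/` and `/production/` path prefixes and that the workload's role should only read staging values; the region, account ID and prefixes are placeholders. It builds the IAM policy document in Python so it can be generated and reviewed alongside other infrastructure code.

```python
import json

# Placeholder values: substitute your own region and account ID.
REGION = "eu-west-1"
ACCOUNT_ID = "123456789012"


def ssm_arn(path: str) -> str:
    """Build a Parameter Store ARN; a leading '/' in the name is not repeated in the ARN."""
    return f"arn:aws:ssm:{REGION}:{ACCOUNT_ID}:parameter/{path.lstrip('/')}"


def staging_only_policy() -> dict:
    """IAM policy letting a workload read /staging parameters and nothing under /production."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadStagingParameters",
                "Effect": "Allow",
                "Action": [
                    "ssm:GetParameter",
                    "ssm:GetParameters",
                    "ssm:GetParametersByPath",
                ],
                # The bare path ARN is included for GetParametersByPath (assumption).
                "Resource": [ssm_arn("/staging"), ssm_arn("/staging/*")],
            },
            {
                # An explicit Deny wins over any broader Allow attached elsewhere.
                "Sid": "DenyProductionParameters",
                "Effect": "Deny",
                "Action": "ssm:*",
                "Resource": [ssm_arn("/production"), ssm_arn("/production/*")],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(staging_only_policy(), indent=2))
```

Because an explicit `Deny` overrides any `Allow` in IAM evaluation, the second statement keeps production parameters out of reach even if a broader policy is attached to the same role later.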

The Slack token helped me confirm that the system was in fact owned by C*llR*il:

```shell
curl -s https://slack.com/api/auth.test -H 'Authorization: Bearer xoxb-rEdAcTeD' | jq
```

```json
{
  "ok": true,
  "url": "https://c*llr*il.slack.com/",
  "team": "C*llR*il",
  "user": "deploy_bot",
  "team_id": "rEdAcTeD",
  "user_id": "rEdAcTeD",
  "bot_id": "rEdAcTeD",
  "is_enterprise_install": false
}
```

It turns out that the DataDog keys were the most interesting, both because I'd never played with the DataDog API before and because of the confidentiality breach they caused.

DataDog API keys give basic access to an organisation, such as submitting data, but not to most of the API. Application keys are created by a user and carry that user's full permissions unless restricted further with scopes. Most DataDog API calls require an application key in addition to the API key.

All apps need to be deployed with some sort of DataDog API key in order to submit logs. In combination with the application key retrieved from SSM, however, logs could also be queried and read. This is not a level of access you want an attacker to have, but you don't need an all-or-nothing attitude to security. Rather than saying "if someone has access to all the production logs, then it's game over," defence-in-depth suggests that by not logging secrets or PII you can limit the damage.
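One defence-in-depth measure is to scrub obvious PII and secrets before log records leave the process. A minimal sketch using Python's standard `logging` module; the `RedactingFilter` class and both regular expressions (phone-number-like digit runs and 4 to 8 digit codes) are illustrative assumptions, not a complete PII detector.

```python
import logging
import re

# Illustrative patterns only: tune them for your own data.
PHONE_RE = re.compile(r"\+?\d[\d\s-]{8,14}\d")  # phone-number-like digit runs
CODE_RE = re.compile(r"\b\d{4,8}\b")  # short numeric codes such as OTPs


def redact(text: str) -> str:
    """Mask phone-number-like strings first, then any remaining short numeric codes."""
    text = PHONE_RE.sub("[PHONE]", text)
    return CODE_RE.sub("[CODE]", text)


class RedactingFilter(logging.Filter):
    """Rewrites each record's message before a handler formats or ships it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = ()  # the arguments are already merged into msg
        return True  # never drop the record, only scrub it


if __name__ == "__main__":
    logger = logging.getLogger("sms")
    handler = logging.StreamHandler()
    # Attaching to the handler also covers records propagated from child loggers.
    handler.addFilter(RedactingFilter())
    logger.addHandler(handler)
    # emits: Send failed to [PHONE]: Your code is [CODE]
    logger.error("Send failed to %s: Your code is %s", "+447700900123", "482913")
```

Pattern-based redaction is best-effort: these patterns will also mask dates, ports and other innocent numbers, and will miss PII they don't describe, so it complements rather than replaces simply not logging message bodies.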

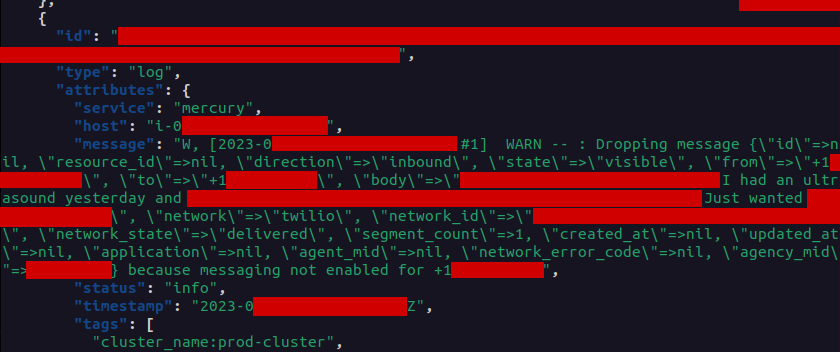

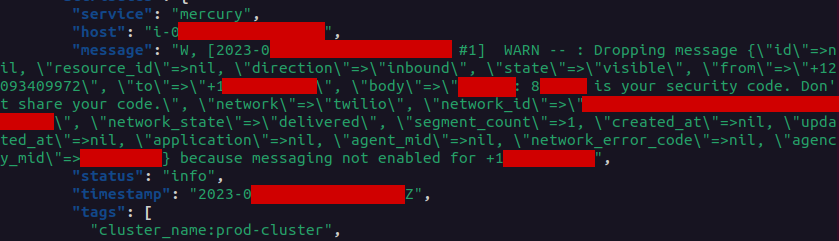

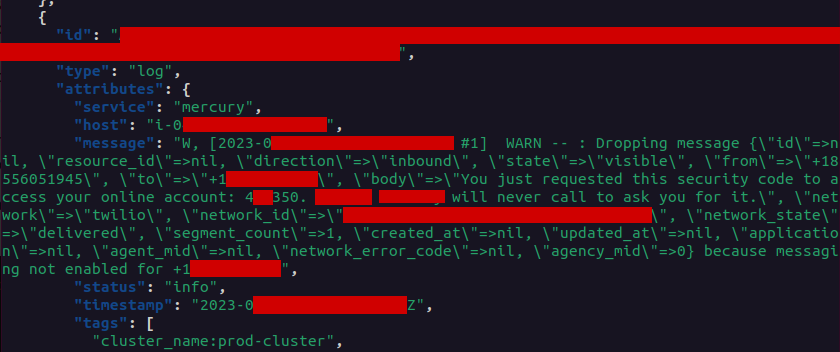

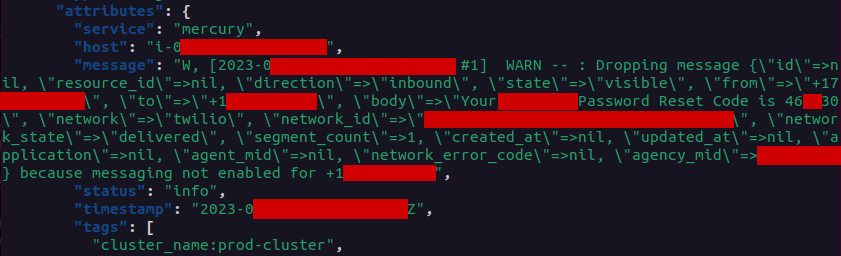

After a very quick poke around, I didn't find any evidence of secrets or PII being routinely stored in logs when the application follows the happy path. When the application encounters errors, however, full SMS messages (metadata and content) are dumped into the logs.

Ideally, this would happen only in genuinely exceptional cases rather than for commonly occurring problems. Developers do need to be aware of errors in order to fix the system, but consider whether the entire plaintext message content needs to be available to, and searchable by, every developer. Encrypting or otherwise protecting logs feels like overkill when attackers can't read them... except I have the DataDog keys, so I can.
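Rather than dumping the whole message when a send fails, the error path can log just enough to debug it: a masked recipient, the message length and a keyed fingerprint that lets an engineer correlate retries of the same message without reading it. A minimal sketch; `sms_failure_event`, the field names and the dedicated `FINGERPRINT_KEY` (which would itself live in a secret store, not in code or logs) are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical key used only for log fingerprints; load it from a secret store in practice.
FINGERPRINT_KEY = b"replace-with-a-secret-from-your-secret-store"


def mask_number(number: str, visible: int = 2) -> str:
    """Keep only the last few digits of a phone number."""
    digits = "".join(ch for ch in number if ch.isdigit())
    return "*" * (len(digits) - visible) + digits[-visible:]


def sms_failure_event(recipient: str, body: str, provider_error: str) -> str:
    """Build a structured log line for a failed send that omits the message text."""
    fingerprint = hmac.new(FINGERPRINT_KEY, body.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
    event = {
        "event": "sms_send_failed",
        "recipient": mask_number(recipient),
        "body_length": len(body),
        "body_fingerprint": fingerprint,
        "provider_error": provider_error,
    }
    return json.dumps(event, sort_keys=True)


if __name__ == "__main__":
    print(sms_failure_event("+447700900123", "Your code is 482913", "timeout"))
```

The fingerprint is keyed (HMAC) on purpose: a 2FA message has only around a million possible six-digit codes, so a plain SHA-256 of a known message template could be brute-forced by anyone who can read the logs.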

If the threat of SIM-swapping didn't already make you consider SMS 2FA dead, the following imagined attack scenario should.

Search the logs for recent errors sending bank 2FA codes. Now you have the name of a bank, a customer's phone number, and the ability to read SMS messages sent by the bank. You can use the knowledge that the user is having trouble logging in to make your smishing (SMS phishing) message more convincing.

```shell
curl "https://api.datadoghq.com/api/v2/logs/events/search" \
  -H "Accept: application/json" \
  -H "DD-API-KEY: rEdAcTeD" -H "DD-APPLICATION-KEY: rEdAcTeD" \
  -H "Content-Type: application/json" \
  -d '{"filter":{"from":"now-32d","query":"security code"}}' \
  | jq
```
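The same endpoint is just as useful to defenders: running the search against your own organisation shows whether message content is reaching your logs before someone else finds out. A minimal sketch using only the Python standard library to build the equivalent of the curl command above; the `DD_API_KEY`/`DD_APP_KEY` environment variable names are hypothetical, `api.datadoghq.com` is the host used above (organisations on other DataDog sites would use a different host), and the `data[].attributes.message` response shape is an assumption.

```python
import json
import os
import urllib.request

SEARCH_URL = "https://api.datadoghq.com/api/v2/logs/events/search"


def build_log_search(query: str, since: str, api_key: str, app_key: str) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the curl example."""
    body = json.dumps({"filter": {"from": since, "query": query}}).encode("utf-8")
    return urllib.request.Request(
        SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Accept": "application/json",
            "Content-Type": "application/json",
            "DD-API-KEY": api_key,
            "DD-APPLICATION-KEY": app_key,
        },
    )


def messages(response: dict) -> list:
    """Extract message text, assuming a data[].attributes.message response shape."""
    return [item.get("attributes", {}).get("message", "") for item in response.get("data", [])]


if __name__ == "__main__":
    api_key, app_key = os.environ.get("DD_API_KEY"), os.environ.get("DD_APP_KEY")
    if api_key and app_key:  # only hit the network when both keys are configured
        req = build_log_search("security code", "now-32d", api_key, app_key)
        with urllib.request.urlopen(req) as resp:
            for line in messages(json.load(resp)):
                print(line)
```

Separating request construction from sending keeps the audit query easy to review and test, and the search terms can be swapped for whatever your own message templates contain.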

Smish to get the user's email address, then follow the password reset flow and intercept the SMS code.

Or, if that requires email access, smish for the username and password. This is still not automatic account access, but two-factor authentication is reduced to a single factor.

The DataDog API also allows user management, retrieving Notebooks (which are used for writing postmortems), and querying Real User Monitoring (RUM) data. RUM records the URLs, activity and browser information for every session, like a super-powered Google Analytics with real user account information attached. It can be used to sniff UUIDs and user email addresses, and generally to spy on the activity of all users. AWS events can also be ingested using the Forwarder, a Lambda function you deploy in your AWS account. If DataDog Audit Trail is enabled, the security team should, once alerted, be able to spot an attacker misusing the DataDog API.

C*llR*il has a Vulnerability Disclosure Program (VDP) allowing security researchers to notify them of breaches. In contrast to a Bug Bounty program, a VDP does not offer any rewards, but it also does not prohibit public disclosure once the issue is resolved. Normally, when I report such a critical vulnerability to a VDP, the report gets transferred to the company's private bug bounty program and they pay me a bounty. I feel like C*llR*il are stingy bastards for refusing to do that (if such a private program exists). Oh well, I wasn't expecting money when reporting to a VDP anyway, and at least they had some sort of security program. The team says the incident is now resolved.

In summary:

- I haven't found many exciting exposed Pinot Controllers; the majority are for testing

- The Pinot Controller provides RCE, which can yield AWS credentials

- Staging environments should not have access to production secrets with super-admin permissions

- DataDog API/Application key pairs with read access can be a giant persistent confidentiality breach

- Logging PII and secrets is a no-no

- Hacking an SMS provider can lead to bank account takeovers (customers of customers of the provider)